Understanding Logit Scaling

Any model with the word "logit" or "logistic" in its name uses something called logit scaling. Logit scaling is a beautiful thing! This post describes how it works and how to interpret it.

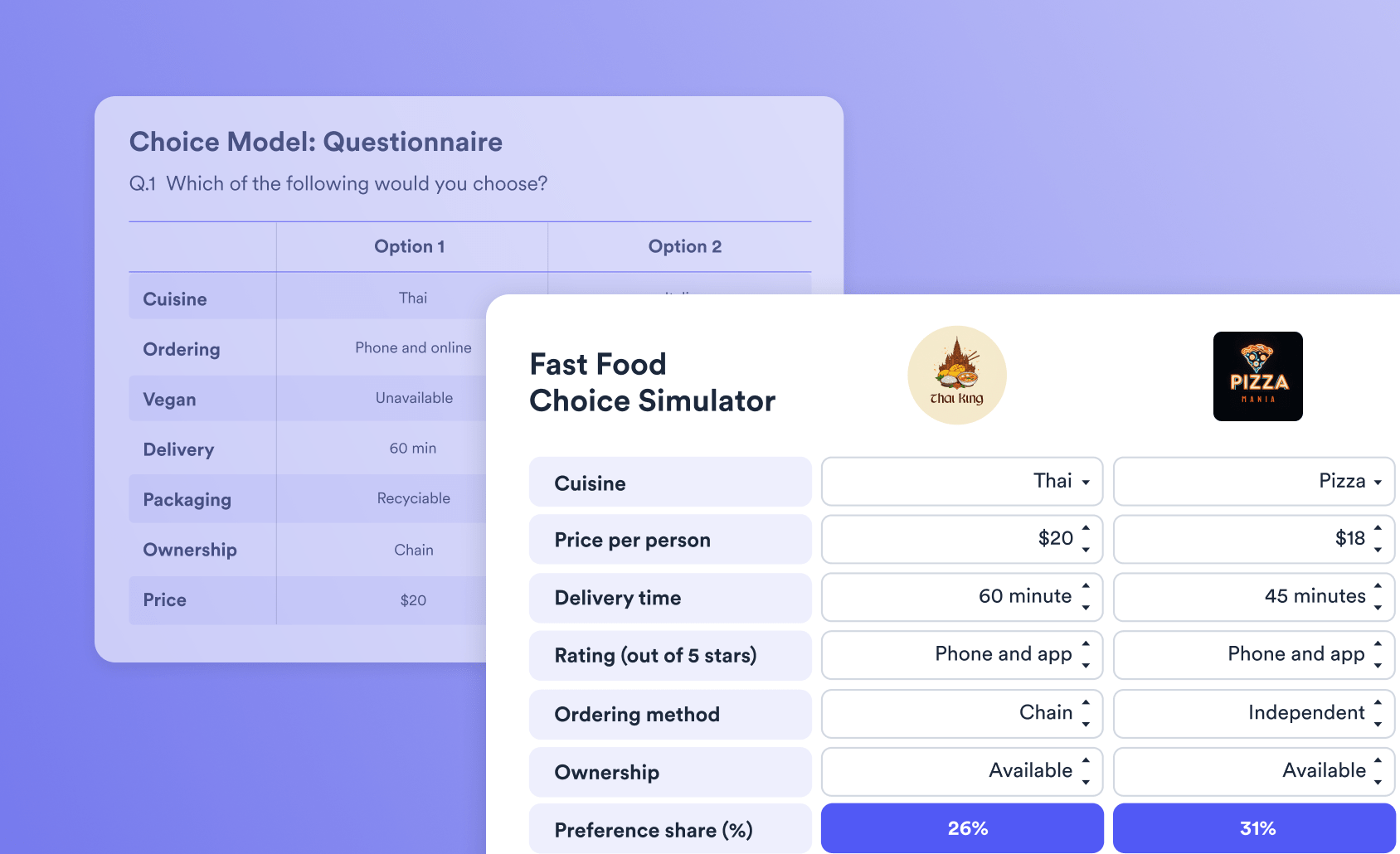

Example: choice-based conjoint analysis utilities

Consider the utilities plot below, which quantifies the appeal of different aspects of home delivery. If you hover your mouse over the plot you will see the utilities. For example, you can see that Mexican has a utility of 4.6 and Indian a utility of 0. These values are logit scaled.

Converting logit-scaled values into probabilities

When things are on a logit scale, it has a couple of profound implications. The first is that we can compute probabilities of preference from the difference between two values. For example, we can see from the utilities that this person seems to prefer Mexican food to Indian food (i.e., 4.6 > 0). The difference is 4.6 - 0 = 4.6 (we are starting with an easy example!), and this means that, given a choice between Indian and Mexican food, we compute there is a 99% chance they would prefer Mexican food. The actual conversion from logit-scaled values to probabilities is a simple formula, 1 / (1 + exp(-4.6)), which is easy to compute in either Excel or R. Note that there is a minus sign prior to the value of 4.6 that we are evaluating.

Comparing Mexican food to Italian, we can see that Italian food is preferred and the difference is 0.5. As the difference is smaller, the probability is closer to 50%. A logit of 0.5 translates to a 62% probability of preferring Italian food to Mexican food.
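The two conversions above can be reproduced with a few lines of Python. This is only a sketch: the function name `preference_probability` is made up for illustration, and the inputs are the utilities and differences quoted in the text.

```python
import math

def preference_probability(utility_a: float, utility_b: float) -> float:
    """Probability that option A is preferred to option B,
    given two logit-scaled utilities (hypothetical helper)."""
    difference = utility_a - utility_b
    # Inverse logit: note the minus sign in front of the difference.
    return 1.0 / (1.0 + math.exp(-difference))

# Mexican (4.6) versus Indian (0), as quoted in the text.
p_mexican_over_indian = preference_probability(4.6, 0.0)

# Italian versus Mexican: the text quotes a difference of 0.5.
p_italian_over_mexican = 1.0 / (1.0 + math.exp(-0.5))

print(f"{p_mexican_over_indian:.0%}")   # 99%
print(f"{p_italian_over_mexican:.0%}")  # 62%
```

A difference of exactly 0 gives 50%, and the probability moves toward 0% or 100% as the difference grows in either direction.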

Summing logit-scaled utilities

Things get even cooler when we add together utilities and then compute differences. Mexican food at $10 has a utility of 4.6 + 3.3 = 7.9, whereas Italian food at $20 has a utility of 5.0 + 1.0 = 6.0. This tells us that this person prefers Mexican food when it is $10 cheaper than Italian food. Further, as the difference is on a logit scale, we can convert the difference 7.9 - 6.0 = 1.9 into a probability of 87%.
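The same arithmetic can be sketched in Python. The dictionary layout and the helper `total_utility` are assumptions made for illustration; the four utility values are the ones quoted above.

```python
import math

# Utilities quoted in the text (keys are illustrative labels).
utilities = {"Mexican": 4.6, "Italian": 5.0, "$10": 3.3, "$20": 1.0}

def total_utility(*levels: str) -> float:
    """Sum the logit-scaled utilities of the chosen attribute levels."""
    return sum(utilities[level] for level in levels)

mexican_at_10 = total_utility("Mexican", "$10")  # 4.6 + 3.3
italian_at_20 = total_utility("Italian", "$20")  # 5.0 + 1.0

# Convert the difference between the two totals into a probability.
difference = mexican_at_10 - italian_at_20
p_mexican_at_10 = 1.0 / (1.0 + math.exp(-difference))

print(f"{p_mexican_at_10:.0%}")  # 87%
```

Because utilities are added before the difference is taken, any combination of attribute levels can be compared in the same way.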

Percentages versus probabilities

Now for some of the ugliness. So far I have described the data as being for a single person, and interpreted the logit scales as representing probabilities. In many situations, the underlying data represents multiple people (or whatever else is being studied). For example, in a model of customer churn, we would interpret the logit in terms of the percentage of people who churn rather than the probability that a particular person churns. Why is this ugly? There are two special cases:

- In many fields, our data may contain repeated measurements. For example, in a typical choice-based conjoint study we would have multiple measurements for multiple people, and this means that the logit is some kind of blend of differences between people and uncertainty about a person's preference. It is usually hard to know which, so common practice is to use whichever interpretation feels most appropriate.

- More modern models, such as hierarchical Bayes, compute logit-scaled values for each person. This is good in that it means we can interpret the scaling as representing probabilities about individual people. But a practical problem is that the underlying mathematics means we cannot interpret averages of coefficients as being on a logit scale. Instead, we need to perform the relevant calculations for each person and then compute the averages of the results.
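The averaging problem in the second case can be illustrated with a small sketch. The four individual-level utility differences below are invented purely for illustration and do not come from any real study; the point is only that converting the average difference gives a different answer from averaging the per-person probabilities.

```python
import math
from statistics import mean

def inverse_logit(x: float) -> float:
    """Convert a logit-scaled difference into a probability."""
    return 1.0 / (1.0 + math.exp(-x))

# Hypothetical per-person differences in utility between two options.
differences = [-3.0, 0.5, 4.0, 6.0]

# Misleading: average the logit-scaled values, then convert once.
probability_of_mean = inverse_logit(mean(differences))

# Recommended in the text: convert for each person, then average.
mean_of_probabilities = mean(inverse_logit(d) for d in differences)

print(f"convert the average:   {probability_of_mean:.1%}")
print(f"average the converted: {mean_of_probabilities:.1%}")
```

With these made-up numbers the two results differ by roughly 20 percentage points, because the inverse logit is a non-linear transformation.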