Hopefully, if you have landed on this post, you have a basic idea of what the R-Squared statistic means. The R-Squared statistic is a number between 0 and 1, or 0% and 100%, that quantifies the proportion of variance explained by a statistical model. Unfortunately, R-Squared goes by many different names. It is the same thing as r-squared, R-square, the coefficient of determination, variance explained, the squared correlation, r2, and R2.
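As a quick refresher, here is a minimal sketch of how the statistic can be computed for a straight-line model with one predictor. The data and the function names (`fit_line`, `r_squared`, `squared_correlation`) are made up for illustration. It also shows why "variance explained" and "the squared correlation" are the same number in this simple case.

```python
def fit_line(x, y):
    """Least-squares fit of y = intercept + slope * x for a single predictor."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def r_squared(observed, predicted):
    """Proportion of variance explained: 1 - SS_residual / SS_total."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1 - ss_res / ss_tot


def squared_correlation(x, y):
    """Square of the Pearson correlation between x and y."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy ** 2 / (sxx * syy)


# Made-up illustrative data, not from any real study.
x = [1, 2, 3, 4, 5, 6]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.2]

intercept, slope = fit_line(x, y)
predicted = [intercept + slope * xi for xi in x]

r2 = r_squared(y, predicted)
r2_from_correlation = squared_correlation(x, y)
print(f"R-Squared: {r2:.4f}, squared correlation: {r2_from_correlation:.4f}")
```

The two numbers agree here because the model is a straight line with a single predictor. With several predictors, R-Squared is instead the squared correlation between the observed and predicted values.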

We get quite a few questions about its interpretation from users of Q and Displayr, so I am taking the opportunity to answer the most common questions as a series of tips for using R2. Read on to find out more about using R-Squared to work out overall fit, why it's a good idea to plot the data when interpreting R-Squared, how to interpret R-Squared values and why you should not use R-Squared to compare models.

1. Don't conclude a model is "good" based on the R-squared

The basic mistake that people make with R-Squared is to try to work out whether a model is "good" or not based on its value. There are two flavors of this question:

- "My R-Squared is 75%. Is that good?"

- "My R-Squared is only 20%; I was told that it needs to be 90%".

The problem with both of these questions is that it is just a bit silly to work out if a model is good or not based on the value of the R-Squared statistic. Sure, it would be great if you could check a model by looking at its R-Squared, but it makes no sense to do so. Most of the rest of the post explains why.

I will point out a caveat to this tip. It is pretty common to develop rules of thumb for particular applications. For example, in driver analysis, models often have R-Squared values of around 0.20 to 0.40. But keep in mind that even if you are doing a driver analysis, having an R-Squared in this range, or better, does not make the model valid. Read on to find out more about how to interpret R-Squared.

2. Use R-Squared to work out overall fit

Sometimes people take point 1 a bit further and suggest that R-Squared is always bad, or that it is bad for special types of models (e.g., don't use R-Squared for non-linear models). This is a case of throwing the baby out with the bath water. There are quite a few caveats, but as a general statistic for summarizing the strength of a relationship, R-Squared is awesome. All else being equal, a model that explains 95% of the variance is likely to be a whole lot better than one that explains 5% of the variance, and will likely produce much, much better predictions.

Of course, often all is not equal, so read on.

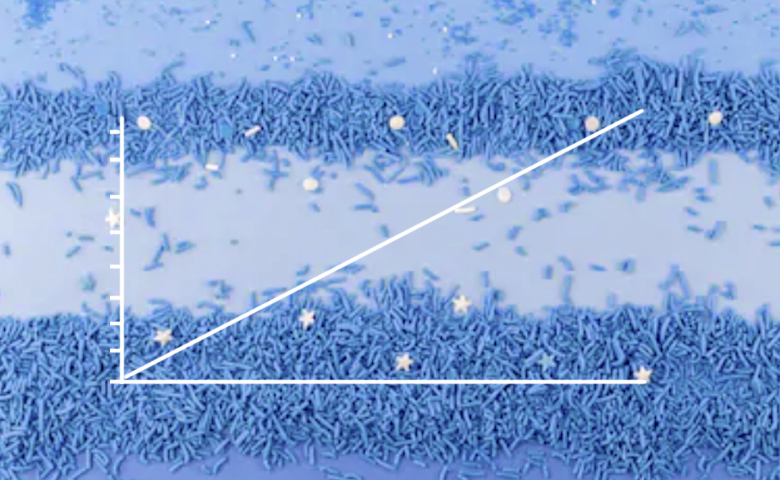

3. Plot the data

When interpreting the R-Squared, it is almost always a good idea to plot the data. That is, create a plot of the observed data against the predicted values. This can reveal situations where R-Squared is highly misleading. For example, if the observed and predicted values do not appear as a cloud formed around a straight line, then the R-Squared, and the model itself, will be misleading. Similarly, outliers can make the R-Squared much larger or much smaller than is appropriate to describe the overall pattern in the data.
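To make this concrete, here is a small sketch using made-up numbers. It relies on the fact that, for a straight-line model with one predictor, R-Squared equals the squared correlation between the predictor and the outcome. The helper name `squared_correlation` and all of the data are hypothetical.

```python
def squared_correlation(x, y):
    """Square of the Pearson correlation; equals R-Squared for a one-predictor line."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy ** 2 / (sxx * syy)


# Made-up data: five points with almost no linear relationship.
x = [1, 2, 3, 4, 5]
y = [3, 5, 1, 4, 2]
r2_without_outlier = squared_correlation(x, y)

# The same five points plus a single extreme observation.
r2_with_outlier = squared_correlation(x + [20], y + [20])

# A perfect but curved relationship (y = x squared) that a straight line misses.
x_curved = [-3, -2, -1, 0, 1, 2, 3]
y_curved = [xi ** 2 for xi in x_curved]
r2_curved = squared_correlation(x_curved, y_curved)

print(f"Without outlier: {r2_without_outlier:.2f}")
print(f"With outlier:    {r2_with_outlier:.2f}")
print(f"Curved data:     {r2_curved:.2f}")
```

In this toy example a single extreme point lifts the R-Squared from about 0.09 to about 0.90, while the curved data gets an R-Squared of zero even though the outcome is perfectly determined by the predictor. A plot would make both problems obvious at a glance.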

4. Be very afraid if you see a value of 0.9 or more

In 25 years of building models, of everything from retail IPOs through to drug testing, I have never seen a good model with an R-Squared of more than 0.9. Such high values always mean that something is wrong, usually seriously wrong.

5. Take context into account

What is a good R-Squared value? Well, you need to take context into account. There are a lot of different factors that can cause the value to be high or low. This makes it dangerous to conclude that a model is good or bad based solely on the value of R-Squared. For example:

- When your predictor or outcome variables are categorical (e.g., rating scales) or counts, the R-Squared will typically be lower than with truly numeric data.

- The more true noise in the data, the lower the R-Squared. For example, if building models based on the stated preferences of people, there is a lot of noise, so a high R-Squared is hard to achieve. By contrast, models of astronomical phenomena typically involve very little noise, so high R-Squared values are much easier to achieve.

- When you have more observations, the R-Squared tends to get lower, because the model has less scope to fit noise.

- When you have more predictor variables, the R-Squared gets higher (this is offset by the previous point; the lower the ratio of observations to predictor variables, the higher the R-Squared).

- If your data is not a simple random sample the R-Squared can be inflated. For example, consider models based on time series data or geographic data. These are rarely simple random samples, and tend to get much higher R-Squared statistics.

- When your model excludes variables that are obviously important, the R-Squared will necessarily be small. For example, if you have a model looking at how brand imagery drives brand preference, and your model ignores practical things like price, distribution, flavor, and quality, the R-Squared is inevitably going to be small even if your model is great.

- Models based on aggregated data (e.g., state-level data) have much higher R-Squared statistics than those based on case-level data.
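The last point in the list above can be illustrated with a small simulation. This is only a sketch under made-up assumptions: 50 hypothetical "states" with 100 cases each, a noisy case-level relationship, and a fixed random seed so the result is repeatable. None of the numbers come from real data.

```python
import random


def squared_correlation(x, y):
    """Square of the Pearson correlation; equals R-Squared for a one-predictor line."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    syy = sum((yi - mean_y) ** 2 for yi in y)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    return sxy ** 2 / (sxx * syy)


random.seed(1)  # fixed seed so the simulation is repeatable

n_states, cases_per_state = 50, 100
case_x, case_y = [], []    # one entry per individual case
state_x, state_y = [], []  # one entry per state (averages)

for _ in range(n_states):
    state_level = random.uniform(0, 10)  # hypothetical state-level driver
    xs = [state_level + random.gauss(0, 1) for _ in range(cases_per_state)]
    ys = [xi + random.gauss(0, 5) for xi in xs]  # noisy individual outcomes
    case_x.extend(xs)
    case_y.extend(ys)
    state_x.append(sum(xs) / cases_per_state)
    state_y.append(sum(ys) / cases_per_state)

r2_case_level = squared_correlation(case_x, case_y)
r2_state_level = squared_correlation(state_x, state_y)

print(f"Case-level R-Squared:  {r2_case_level:.2f}")
print(f"State-level R-Squared: {r2_state_level:.2f}")
```

The underlying relationship is identical in both analyses. Averaging within states removes most of the case-level noise, which is why the state-level R-Squared comes out so much higher.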

6. Think long and hard about causality

For the R-Squared to have any meaning at all in the vast majority of applications it is important that the model says something useful about causality. Consider, for example, a model that predicts adults' height based on their weight and gets an R-Squared of 0.49. Is such a model meaningful? It depends on the context. But, for most contexts the model is unlikely to be useful. The implication, that if we get adults to eat more they will get taller, is rarely true.

But, consider a model that predicts tomorrow's exchange rate and has an R-Squared of 0.01. If the model is sensible in terms of its causal assumptions, then there is a good chance that this model is accurate enough to make its owner very rich.

7. Don't use R-Squared to compare models

A natural thing to do is to compare models based on their R-Squared statistics. If one model has a higher R-Squared value, surely it is better? This is, as a pretty general rule, an awful idea. There are two different reasons for this:

- In many situations the R-Squared is misleading when compared across models. Examples include comparing a model based on aggregated data with one based on disaggregated data, or comparing models in which the variables have been transformed in different ways.

- Even in situations where the R-Squared may be meaningful, there are always better tools for comparing models. These include F-tests, Bayes factors, information criteria, and out-of-sample predictive accuracy.
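As a sketch of why out-of-sample predictive accuracy is the safer comparison, the simulation below pits a straight-line model against a deliberately silly "memorizer" that predicts each new case by copying the outcome of the closest case it has already seen. Everything here is hypothetical: the simulated data, the fixed seed, and the function names.

```python
import random


def fit_line(x, y):
    """Least-squares fit of y = intercept + slope * x for a single predictor."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def r_squared(observed, predicted):
    """1 - SS_residual / SS_total, computed on whatever data is passed in."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1 - ss_res / ss_tot


def memorizer_predict(train_x, train_y, new_x):
    """Predict by copying the outcome of the closest training case."""
    nearest = min(range(len(train_x)), key=lambda i: abs(train_x[i] - new_x))
    return train_y[nearest]


def simulate(n):
    """Made-up data: a linear signal plus a fair amount of noise."""
    xs = [random.uniform(0, 10) for _ in range(n)]
    ys = [2 * xi + random.gauss(0, 3) for xi in xs]
    return xs, ys


random.seed(2)  # fixed seed so the simulation is repeatable
train_x, train_y = simulate(200)
test_x, test_y = simulate(200)  # held-out data the models never saw

intercept, slope = fit_line(train_x, train_y)

in_sample = {
    "line": r_squared(train_y, [intercept + slope * xi for xi in train_x]),
    "memorizer": r_squared(
        train_y, [memorizer_predict(train_x, train_y, xi) for xi in train_x]
    ),
}
out_of_sample = {
    "line": r_squared(test_y, [intercept + slope * xi for xi in test_x]),
    "memorizer": r_squared(
        test_y, [memorizer_predict(train_x, train_y, xi) for xi in test_x]
    ),
}

for name in ("line", "memorizer"):
    print(f"{name}: in-sample {in_sample[name]:.2f}, "
          f"out-of-sample {out_of_sample[name]:.2f}")
```

On the data used to build the models, the memorizer gets a perfect R-Squared and looks like the clear winner. On the held-out data the ranking reverses, which is the comparison that actually matters.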

8. Don't interpret pseudo R-Squared statistics as explaining variance

Technically, R-Squared is only valid for linear models with numeric data. While I find it useful for lots of other types of models, it is rare to see it reported for models using categorical outcome variables (e.g., logit models). Many pseudo R-Squared statistics have been developed for such purposes (e.g., McFadden's Rho, Cox & Snell). These are designed to mimic R-Squared in that 0 means a bad model and 1 means a great model. However, they are fundamentally different from R-Squared in that they do not indicate the variance explained by a model. For example, if McFadden's Rho is 50%, even with linear data, this does not mean that the model explains 50% of the variance. No such interpretation is possible. In particular, many of these statistics can never get to a value of 1.0, even if the model is "perfect".
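For readers who want to see what such a statistic is actually measuring, here is a minimal sketch of McFadden's statistic, assuming its usual definition as one minus the ratio of the model's log-likelihood to the log-likelihood of a null model that predicts the base rate for everyone. The outcomes and predicted probabilities are made up, not the output of a fitted model, and the function names are hypothetical.

```python
import math


def log_likelihood(outcomes, probabilities):
    """Bernoulli log-likelihood of 0/1 outcomes given predicted probabilities."""
    return sum(
        math.log(p) if y == 1 else math.log(1 - p)
        for y, p in zip(outcomes, probabilities)
    )


def mcfadden_pseudo_r2(outcomes, probabilities):
    """1 - LL(model) / LL(null), where the null model predicts the base rate."""
    base_rate = sum(outcomes) / len(outcomes)
    ll_null = log_likelihood(outcomes, [base_rate] * len(outcomes))
    ll_model = log_likelihood(outcomes, probabilities)
    return 1 - ll_model / ll_null


# Made-up binary outcomes and hypothetical predicted probabilities.
outcomes = [0, 0, 0, 0, 1, 1, 1, 1]
predicted_probabilities = [0.1, 0.2, 0.3, 0.6, 0.4, 0.7, 0.8, 0.9]

pseudo_r2 = mcfadden_pseudo_r2(outcomes, predicted_probabilities)
print(f"McFadden's pseudo R-Squared: {pseudo_r2:.2f}")
```

Nothing in this calculation involves sums of squares or variances. The statistic compares log-likelihoods, which is why it cannot be read as a proportion of variance explained.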
