Gradient Boosting Explained – The Coolest Kid on The Machine Learning Block

What is gradient boosting?

Gradient boosting is a technique attracting attention for its prediction speed and accuracy, especially with large and complex data.

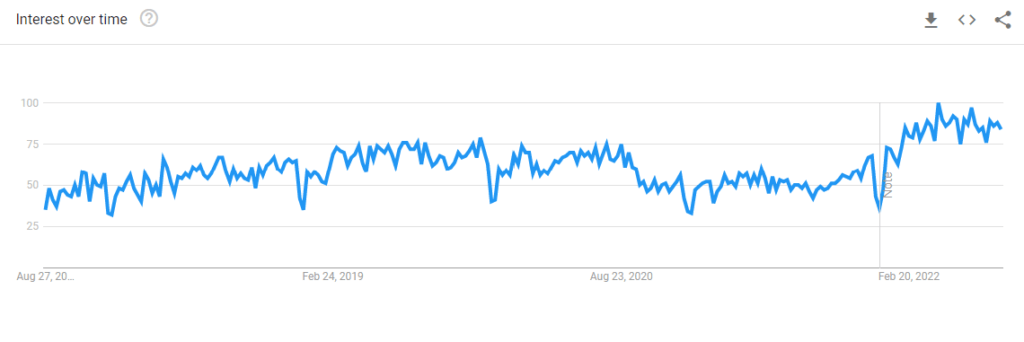

Don't just take my word for it: the chart shows the rapid growth of Google searches for xgboost (the most popular gradient boosting R package). From data science competitions to machine learning solutions for business, gradient boosting has produced best-in-class results.

In this blog post I describe what gradient boosting is and how to use it.

Try your own gradient boosting

Ensembles and boosting

Machine learning models can be fitted to data individually, or combined in an ensemble. An ensemble is a combination of simple individual models that together create a more powerful new model.

Machine learning boosting is a method for creating an ensemble. It starts by fitting an initial model (e.g. a tree or linear regression) to the data. Then a second model is built that focuses on accurately predicting the cases where the first model performs poorly. The combination of these two models is expected to be better than either model alone. Then you repeat this process of boosting many times. Each successive model attempts to correct for the shortcomings of the combined boosted ensemble of all previous models.
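
As a rough sketch of this loop, here is a toy version in plain Python (not the R code behind this post). It assumes a single numeric predictor, a one-split tree ("stump") as the individual model, squared error as the measure of poor performance, and a made-up data set. The names `fit_stump`, `boost` and `learning_rate` are my own for illustration and are not xgboost's API.

```python
# Toy boosting for regression with one-split "stumps" on a single predictor.
# Data, function names and settings are illustrative assumptions.

def fit_stump(xs, targets):
    """Pick the threshold on x whose two leaf means give the lowest squared error."""
    best = None
    for threshold in sorted(set(xs))[:-1]:  # skip the largest x so both sides are non-empty
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((t - left_mean) ** 2 for t in left)
                 + sum((t - right_mean) ** 2 for t in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def boost(xs, ys, n_rounds=20, learning_rate=0.5):
    """Repeatedly fit a stump to whatever the current ensemble still gets wrong."""
    base = sum(ys) / len(ys)  # initial model: always predict the mean
    stumps = []

    def predict(x):
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict


def mean_squared_error(model, xs, ys):
    return sum((y - model(x)) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.2, 0.9, 3.1, 3.0, 2.8, 5.2, 5.0]

one_round = boost(xs, ys, n_rounds=1)
many_rounds = boost(xs, ys, n_rounds=20)
print(mean_squared_error(one_round, xs, ys))
print(mean_squared_error(many_rounds, xs, ys))
```

On the training data, the ensemble with more rounds has the lower error, because every new stump is aimed at the cases the combined model so far predicts worst.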

Gradient boosting explained

Gradient boosting is a type of machine learning boosting. It relies on the intuition that the best possible next model, when combined with previous models, minimizes the overall prediction error. The key idea is to set the target outcomes for this next model in order to minimize the error. How are the targets calculated? The target outcome for each case in the data depends on how much changing that case's prediction impacts the overall prediction error:

- If a small change in the prediction for a case causes a large drop in error, then the next target outcome of the case is a high value. Predictions from the new model that are close to its targets will reduce the error.

- If a small change in the prediction for a case causes no change in error, then the next target outcome of the case is zero. Changing this prediction does not decrease the error.

The name gradient boosting arises because target outcomes for each case are set based on the gradient of the error with respect to the prediction. Each new model takes a step in the direction that minimizes prediction error, in the space of possible predictions for each training case.
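
To make the two cases above concrete, here is a small Python sketch. It assumes a squared-error loss, which is my choice for illustration (xgboost supports other objectives), and the observed and predicted values are made up. Under that assumption the target for each case, the negative gradient of the loss with respect to its prediction, is simply the observed value minus the current prediction.

```python
# Targets for the next model = negative gradient of the loss with respect to
# each case's current prediction. Squared error is an assumption made here.

def loss(y, prediction):
    return 0.5 * (y - prediction) ** 2


def negative_gradient(y, prediction):
    # The derivative of 0.5 * (y - prediction)^2 with respect to the prediction
    # is -(y - prediction), so its negative is the ordinary residual.
    return y - prediction


def numeric_negative_gradient(y, prediction, eps=1e-6):
    # Finite-difference check: how fast does the loss fall as the prediction rises?
    return -(loss(y, prediction + eps) - loss(y, prediction - eps)) / (2 * eps)


observed = [7.0, 5.0, 6.0]  # made-up outcomes
current = [5.0, 5.0, 6.5]   # made-up predictions from the ensemble so far

targets = [negative_gradient(y, p) for y, p in zip(observed, current)]
print(targets)  # [2.0, 0.0, -0.5]
```

The first case is badly under-predicted, so it gets a large target. The second is already predicted exactly, so its target is zero. The third is over-predicted, so its target is negative.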

Time for a drink

Maybe that explanation was a bit heavy, so let's relax. To be more specific, how about a glass of wine? The data set I will be using to illustrate gradient boosting describes nearly 5000 Portuguese white wines (described here).

I will use quality as the target outcome variable. Quality is the median of at least 3 evaluations made by wine experts, on a scale from 1 to 10. The histogram below shows its distribution.

I use all 11 other variables as the predictors. These describe the chemical composition of the wine. Fortunately, they are all numeric. Otherwise, they would have to be converted to numeric, as required by xgboost.

As a preparatory step, I split the data into a 70% training set and a 30% testing set. This allows me to fit the model to the training set. Then I can independently assess the performance of the model with the testing set.
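
A split like this can be sketched in a few lines of Python. This is a minimal illustration, not the code used for the outputs in this post: the function name, the fixed seed and the stand-in rows are all assumptions.

```python
import random


def train_test_split(rows, train_fraction=0.7, seed=42):
    """Shuffle a copy of the rows, then cut it into training and testing parts."""
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    shuffled = rows[:]
    rng.shuffle(shuffled)
    cut = int(round(train_fraction * len(shuffled)))
    return shuffled[:cut], shuffled[cut:]


rows = list(range(100))  # stand-ins for wine records
train, test = train_test_split(rows)
print(len(train), len(test))  # 70 30
```

Shuffling before cutting matters: if the file happened to be sorted by quality, taking the first 70% as-is would give training and testing sets with very different wines.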

Machine learning boosting is most often done with an underlying tree model, although a linear regression is also available as an option in xgboost. In this case, I use the tree and plot the predictor importance scores below. The scores tell us that the alcohol content is by far the most important predictor of quality, followed by the volatile acidity.

Performance evaluation

Because of the possibility of overfitting, the prediction accuracy on the training data is of little use. So let's find the accuracy of this model on the test data.

The table below illustrates the overlap between the categories predicted by the model and the original category of each case within the testing sample. The overall accuracy, shown beneath the table, is 64.74%.
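
For readers unfamiliar with this kind of table, overall accuracy is the share of test cases that fall on its diagonal, where the predicted category equals the original one. The Python sketch below shows the calculation on invented counts for three categories. They are not the wine results.

```python
def accuracy(confusion):
    """Share of cases on the diagonal of a square table of counts.

    Rows are the original categories and columns are the predicted ones.
    """
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


# Invented counts, for illustration only.
toy_table = [
    [30, 10, 0],
    [8, 50, 12],
    [1, 9, 20],
]
print(f"{accuracy(toy_table):.2%}")
```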

However, there is one more trick to enhance this. xgboost uses various parameters to control the boosting, e.g. maximum tree depth. I automatically try many combinations of these parameters and select the best by cross-validation. This takes more time to run, but accuracy on the testing sample increases to 65.69%.
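
The search itself follows a simple pattern, sketched below in Python. To keep the example self-contained I use a tiny nearest-neighbour classifier as a stand-in model and tune its number of neighbours. With xgboost, the grid would instead hold settings such as maximum tree depth. The function names, the grid and the toy data are all assumptions for illustration.

```python
import itertools


def k_fold_splits(n_cases, n_folds):
    """Yield (train_indices, validation_indices), holding out one fold at a time."""
    for fold in range(n_folds):
        validation = [i for i in range(n_cases) if i % n_folds == fold]
        train = [i for i in range(n_cases) if i % n_folds != fold]
        yield train, validation


def cross_validated_accuracy(fit, params, xs, ys, n_folds=5):
    """Average held-out accuracy of one parameter combination across the folds."""
    scores = []
    for train, validation in k_fold_splits(len(xs), n_folds):
        model = fit([xs[i] for i in train], [ys[i] for i in train], **params)
        hits = sum(model(xs[i]) == ys[i] for i in validation)
        scores.append(hits / len(validation))
    return sum(scores) / len(scores)


def grid_search(fit, grid, xs, ys, n_folds=5):
    """Try every combination in the grid and keep the best cross-validated one."""
    names = sorted(grid)
    best_params, best_score = None, -1.0
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = cross_validated_accuracy(fit, params, xs, ys, n_folds)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def fit_nearest_neighbours(train_xs, train_ys, n_neighbours):
    """Stand-in model: majority label among the closest training cases."""
    def predict(x):
        nearest = sorted(zip(train_xs, train_ys),
                         key=lambda pair: abs(pair[0] - x))[:n_neighbours]
        labels = [label for _, label in nearest]
        return max(set(labels), key=labels.count)
    return predict


# Toy data: label 1 for larger x, with two deliberately noisy cases.
xs = list(range(20))
ys = [0] * 10 + [1] * 10
ys[3], ys[15] = 1, 0

grid = {"n_neighbours": [1, 3, 5]}
best_params, best_score = grid_search(fit_nearest_neighbours, grid, xs, ys)
print(best_params, best_score)
```

Only the training set should go into a search like this. The testing set is kept aside so that the final accuracy is still an independent assessment.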

How good is this model? For comparison, I'll repeat the analysis with CART instead of gradient boosting. The accuracy is much lower, at around 50%. Since alcohol is used for the first split of the tree, the resulting Sankey diagram below agrees with the boosting on the most important variable. So next time you buy the most alcoholic bottle of wine, you can tell yourself it's really quality you are buying!

The examples in this post use Displayr as a front-end to running the R code. If you go into our example document, you can see the outputs for yourself. The code used to generate each output is accessible by selecting the output and clicking Properties > R CODE on the right-hand side of the screen. The heavy lifting is done with the xgboost package, via our own package flipMultivariates (available on GitHub), and the prediction-accuracy tables are found in our own flipRegression (also available on GitHub).

Feature Image By Kirsten Hartsoch [2] - [1], CC BY 2.0, Link

We hope you've improved your understanding of gradient boosting.