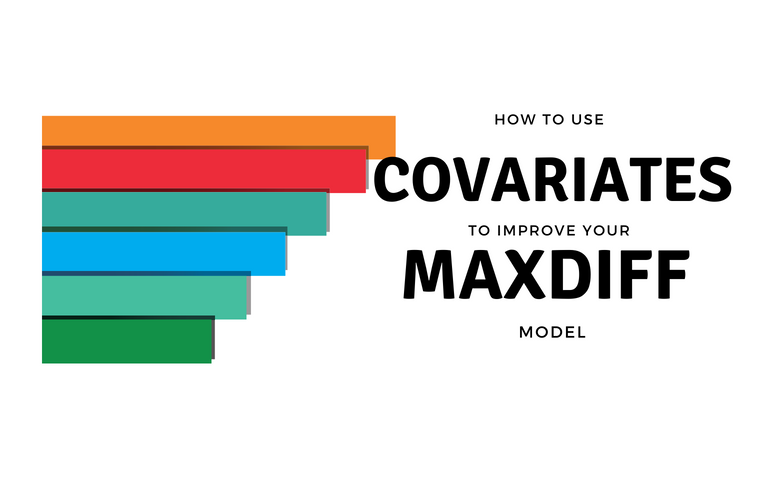

Ready to improve the accuracy of your MaxDiff model? Today, I'll explain why you'll want to include covariates in your model and how to include them in your MaxDiff analyses using Hierarchical Bayes. I'll walk you through an example that investigates the qualities voters want in a U.S. president.

Create your own MaxDiff Design

MaxDiff is a type of best-worst scaling. Respondents are asked to compare all choices in a given set and pick their best and worst (or most and least favorite). For an introduction, check out this great webinar by Tim Bock. In this post, we'll discuss why you may want to include covariates in the first place and how they can be included in Hierarchical Bayes (HB) MaxDiff. Then we'll use the approach to examine the qualities voters look for in a U.S. president.

Why include respondent-specific covariates?

Advances in computing have made it simple to include complex respondent-specific covariates in HB MaxDiff models. There are several reasons why we may want to do this in practice.

- A standard model which assumes each respondent's part-worth is drawn from the same normal distribution may be too simplistic. Information drawn from additional covariates may improve the estimates of the part-worths. This is likely to be the case for surveys in which there were fewer questions and therefore less information.

- Additionally, when respondents are segmented, we may be worried that the estimates for one segment are biased. Another concern is that HB may shrink the segment means overly close to each other. This is especially problematic if sample sizes vary greatly between segments.

How to include covariates in the model

In the usual HB model, we model the part-worths for the i-th respondent as β_i ~ N(μ, Σ). Note that the mean and covariance parameters μ and Σ do not depend on i and are the same for each respondent in the population. The simplest way to include respondent-specific covariates in the model is to modify μ to be dependent on the respondent's covariates.

We do this by modifying the model for the part-worths to β_i ~ N(Θx_i, Σ), where x_i is a vector of known covariate values for the i-th respondent and Θ is a matrix of unknown regression coefficients. Each row of Θ is given a multivariate normal prior. The covariance matrix, Σ, is re-expressed in two parts, a correlation matrix and a vector of scales, and each part receives its own prior distribution.
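To make the notation concrete, here is a minimal Python sketch that simulates part-worths whose mean depends on respondent covariates. It uses NumPy purely for illustration (Q and Displayr fit the model with Stan), and the dimensions, seed, and the names `theta`, `scales` and `corr` are hypothetical. The covariance matrix is built from a vector of scales and a correlation matrix, mirroring the decomposition described above.

```python
import numpy as np

rng = np.random.default_rng(0)

n_respondents = 6   # hypothetical sample size
n_attributes = 4    # hypothetical number of part-worths per respondent
n_covariates = 3    # intercept plus two dummy-coded covariate levels

# x_i: known covariate vector for each respondent (first column is an intercept).
x = np.zeros((n_respondents, n_covariates))
x[:, 0] = 1.0
x[2:4, 1] = 1.0   # respondents 2-3 belong to the second level
x[4:6, 2] = 1.0   # respondents 4-5 belong to the third level

# Theta: regression coefficients, one row per attribute, one column per covariate.
theta = rng.normal(size=(n_attributes, n_covariates))

# Covariance built from scales and a correlation matrix:
# Sigma = diag(scales) @ corr @ diag(scales)
scales = np.array([0.5, 1.0, 1.5, 2.0])
corr = np.full((n_attributes, n_attributes), 0.3)
np.fill_diagonal(corr, 1.0)
sigma = np.diag(scales) @ corr @ np.diag(scales)

# Respondent-specific means Theta x_i, then one draw beta_i ~ N(Theta x_i, Sigma) each.
means = x @ theta.T
beta = np.array([rng.multivariate_normal(m, sigma) for m in means])
```

Respondents who share the same covariate values share the same mean, so the draws for a segment are centered on that segment's own mean rather than on a single population mean.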

Fitting covariates in Q and Displayr

This model can be fit in Q and Displayr, which use the No-U-Turn sampler from Stan, the state-of-the-art software for fitting Bayesian models. The package allows us to quickly and efficiently estimate our model without having to worry about selecting the tuning parameters that are frequently a major hassle in Bayesian computation and machine learning. The package also provides a number of features for visualizing the results and diagnosing any issues with the model fit.

Download our free MaxDiff ebook

Example in Displayr

The dataset

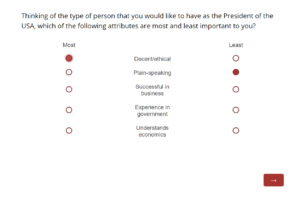

Our data set asked 315 Americans ten questions about the attributes they look for in a U.S. president. Each question asked the respondents to pick their most and least important attributes from a set of five. The attributes were:

- Decent/ethical

- Plain-speaking

- Healthy

- Successful in business

- Good in a crisis

- Experienced in government

- Concerned for the welfare of minorities

- Understands economics

- Concerned about global warming

- Concerned about poverty

- Has served in the military

- Multilingual

- Entertaining

- Male

- From a traditional American background

- Christian

For more information, please see this earlier blog post, which analyzes the same data using HB, but does not consider covariates.

Fitting your MaxDiff Model

In Displayr and Q, we can fit a MaxDiff model by selecting Marketing > MaxDiff > Hierarchical Bayes from the menu (Anything > Advanced Analysis in Displayr and Create in Q). See this earlier blog post for a description of the HB controls/inputs and a demo using a different data set. Documentation specific to the Displayr GUI is on the Q wiki.

We then included a single categorical predictor in the model: responses to the question of who they voted for in the 2016 election. The predictor had the following levels: voted for Clinton, voted for Trump, voted for another candidate, didn't vote, and don't know or refused to answer.

We would expect this predictor to have a very strong correlation with the best and worst choices for each respondent. To compare the models with and without covariates in Displayr, first fit the model without covariates and then copy/paste the created R item.

To add the covariates, select them from the drop-down box labeled "Covariates" under the section MODEL in the Object Inspector of your copied HB output.
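For intuition about what a categorical covariate contributes to the model, the sketch below turns the five vote levels into a covariate vector for each respondent. The reference-level (dummy) coding with an intercept is an assumption for illustration; the exact coding Q and Displayr use internally is not described in this post, and the function name `dummy_code` is hypothetical.

```python
import numpy as np

# Levels of the 2016 vote question; the first level acts as the reference (assumption).
LEVELS = [
    "Clinton",
    "Trump",
    "Another candidate",
    "Didn't vote",
    "Don't know/refused",
]

def dummy_code(responses, levels=LEVELS):
    """Return a design matrix with an intercept and one column per non-reference level."""
    index = {level: j for j, level in enumerate(levels)}
    x = np.zeros((len(responses), len(levels)))
    x[:, 0] = 1.0  # intercept column
    for i, response in enumerate(responses):
        j = index[response]
        if j > 0:  # the reference level is represented by the intercept alone
            x[i, j] = 1.0
    return x

votes = ["Trump", "Clinton", "Didn't vote"]
x = dummy_code(votes)
```

Each row of `x` plays the role of one respondent's covariate vector, so a five-level predictor adds four extra coefficients per attribute on top of the intercept.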


Checking convergence

We fit the models using 1000 iterations and eight Markov chains. When conducting an HB analysis, it is vital to check that the algorithm used has both converged to and adequately sampled from the posterior distribution. Using the HB diagnostics available in Displayr (see this post for a detailed overview), there appeared to be no issues with convergence for this data. We then assessed the performance of our models by leaving out one or more questions per respondent and seeing how well we could predict their choices using the estimated model.
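The holdout check can be sketched roughly as follows. This is an illustration under stated assumptions, not the calculation Displayr performs: it scores only the "best" choice, predicts it as the shown attribute with the highest estimated part-worth, and uses hypothetical names (`holdout_accuracy`, `beta`, `shown`, `best`) and made-up numbers.

```python
import numpy as np

def holdout_accuracy(beta, shown, best):
    """Share of held-out questions whose 'best' pick has the highest part-worth.

    beta:  (n_respondents, n_attributes) estimated part-worths
    shown: (n_respondents, set_size) attribute indices shown in the held-out question
    best:  (n_respondents,) attribute index each respondent picked as best
    """
    beta = np.asarray(beta, dtype=float)
    shown = np.asarray(shown)
    best = np.asarray(best)
    # Part-worths of only the attributes each respondent actually saw.
    utilities = np.take_along_axis(beta, shown, axis=1)
    # Predicted best = the shown attribute with the largest part-worth.
    predicted = shown[np.arange(len(shown)), utilities.argmax(axis=1)]
    return float(np.mean(predicted == best))

# Two hypothetical respondents, four attributes, three shown per question.
beta = [[2.0, 0.1, -1.0, 0.5],
        [-0.3, 1.2, 0.4, 0.0]]
shown = [[1, 2, 3],
         [0, 2, 3]]
best = [3, 0]
accuracy = holdout_accuracy(beta, shown, best)
```

Because the held-out question is not used when estimating the part-worths, this accuracy measures how well the model generalizes rather than how well it fits.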

Results

If we only hold out one question for prediction and use the other nine questions to fit the models, the effect of the categorical predictor is small. The model with the categorical predictor takes longer to run for the same number of iterations due to the increased number of parameters, and it yields only a modest improvement in out-of-sample prediction accuracy (from 67.0% to 67.4%). We did not gain much from including the predictor because we could already draw substantial information from the nine MaxDiff questions.

Including fixed covariates becomes much more advantageous when you have fewer MaxDiff questions, as in the extreme example of only having two questions to fit the models. We see a larger improvement in out-of-sample prediction accuracy (from 54.5% to 55.0%). We also see a much higher effective sample size per second. This means that the algorithm is able to sample much more efficiently with the covariate included. Even more importantly, this saves us time, as we don't need to use as many iterations to obtain our desired number of effective samples.


Ready to include your own covariates for analysis?